ACA Responds to Trump’s Reckless Nuclear Testing Remarks

On Thursday, Oct. 30, U.S. President Donald Trump said he had “instructed the Department of War to start testing our Nuclear Weapons on an equal basis. That process will begin immediately.”

For Immediate Release: October 30, 2025

Media Contacts: Daryl Kimball, Executive Director (202-463-8270 x107), Xiaodon Liang, Senior Policy Analyst (x113)

(Washington, D.C.)— Last night, U.S. President Donald Trump said he had “instructed the Department of War to start testing our Nuclear Weapons on an equal basis. That process will begin immediately.”

In response, ACA Executive Director Daryl G. Kimball stated, “Trump appears to be misinformed and out of touch. The U.S. has no technical, military, or political reason to resume nuclear explosive testing for the first time since 1992, when a bipartisan majority of the U.S. Congress mandated a nuclear test moratorium. It would take at least 36 months to resume contained nuclear testing underground at the former Nevada Nuclear Test Site outside Las Vegas.”

The United States has conducted 1,030 nuclear test explosions since 1945, more than half of the 2,056 nuclear test explosions conducted worldwide.

Brandon Williams, the current administrator of the National Nuclear Security Administration (NNSA), said the following during his confirmation hearing earlier this year: “We collected more data than anyone else. And it is precisely that data that has underpinned our scientific basis for confirming the stockpile. I would not advise … testing.”

In his written responses to questions from Congress, Williams said: “The United States continues to observe its 1992 nuclear test moratorium; and, since 1992, has assessed that the deployed nuclear stockpile remains safe, secure, and effective without nuclear explosive testing.”

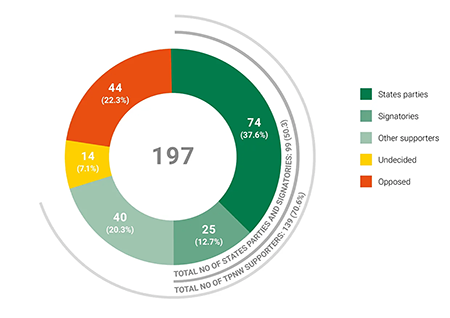

“Today, 187 states (nearly all the world’s nations, including the five largest nuclear powers) have signed the 1996 Comprehensive Test Ban Treaty, and as a signatory to the treaty, the United States is legally obligated to respect it. No country except North Korea has conducted a nuclear test explosion in this century, and even they have stopped,” Daryl Kimball said.

“By foolishly announcing his intention to resume nuclear testing, Trump will trigger strong international opposition that could unleash a chain reaction of nuclear testing by U.S. adversaries, and blow apart the nuclear Nonproliferation Treaty,” Kimball stressed.

Although Trump said he was ordering the resumption of testing “because of other countries testing programs,” Moscow immediately denied that Russia had conducted any nuclear tests.

Kremlin spokesman Dmitry Peskov suggested that Trump was perhaps referring to the announced tests of Russia’s new nuclear-powered Burevestnik cruise missile and its nuclear-capable Poseidon super torpedo, and told reporters, “If somehow the Burevestnik tests are being implied, this is not a nuclear test.”

“Accusations that Russia and China may have conducted very low-yield but supercritical nuclear weapons tests are unsubstantiated and highly debatable, and such tests provide little value for advancing the capabilities of their nuclear programs. Such concerns are far better dealt with by ratifying the CTBT and securing the option for short-notice on-site inspections and/or other confidence-building measures,” Kimball said.

“Trump’s nuclear policy is incoherent and unclear: calling for denuclearization talks one day, threatening nuclear tests the next. But what is clear is that U.S. resumption of nuclear testing, or reckless words and actions that trigger a nuclear testing chain reaction, harm U.S. security,” Kimball said.

_____

Additional Background Information: Resources on the Comprehensive Test Ban Treaty (CTBT), the effects of nuclear weapons testing, and a recent civil society statement to the 14th Article XIV Conference on Facilitating Entry into Force of the CTBT are listed below:

- Nuclear Testing and Comprehensive Test Ban Treaty Timeline

- Comprehensive Test Ban Treaty at a Glance

- Nuclear Testing Tally 1945-2017

- Civil Society Statement for the 14th CTBT Article XIV Conference on Facilitating Entry Into Force, Sept. 26, 2025

- The Toxic and Radiological Legacy Panel Discussion at the ACA-Win Without War "From Trinity to Today" Event on July 10, 2025