"No one can solve this problem alone, but together we can change things for the better."

Keep Human Control Over New Weapons

April 2019

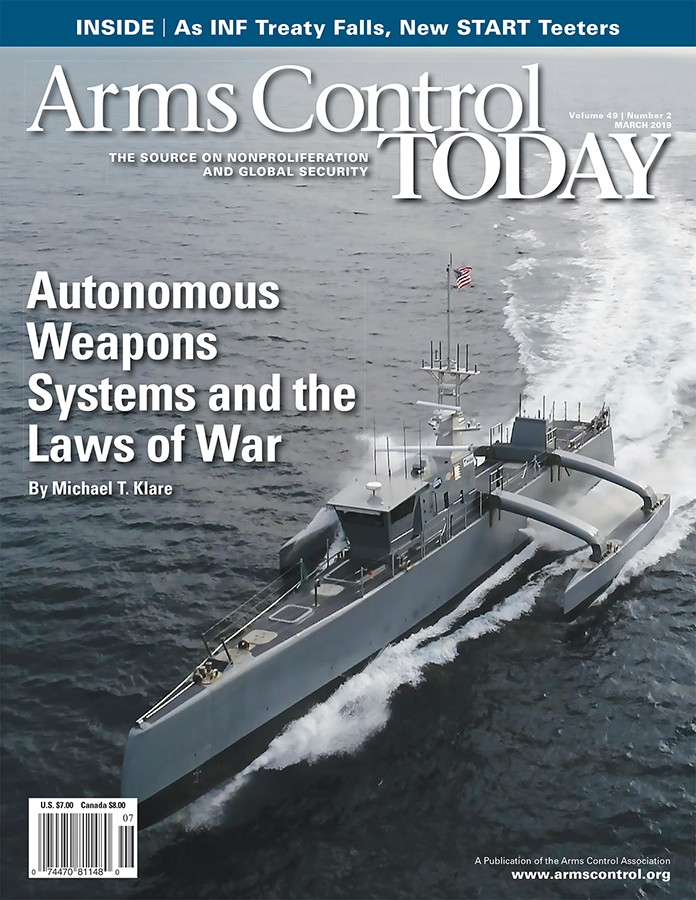

Michael T. Klare’s article “Autonomous Weapons Systems and the Laws of War” (ACT, March 2019) highlights important issues, but omits enormous strategic risks inherent to the use of artificial intelligence (AI) in a role of command. In a war between major powers, both sides’ AI systems would be able to outperform humans in the application of game theory, similar to state-of-the-art poker-playing AI, which can now readily defeat the top human players. Nation-states would be tempted to endow these genius-level autonomous killing systems with the authority to escalate, bringing the risk of bad luck causing misunderstandings that lead to uncontrolled escalation.

Exacerbating the risk of near-instant autonomous escalation is the risk of an AI system deciding that it, unlike a human, may have nothing to lose. A human commander might be a father or mother who might choose to put the preservation of the human race above nationalistic warmongering. Recall how Soviet air defense officer Stanislav Petrov (a father of two) saved the world in 1983 by choosing to disbelieve a computerized system that claimed a U.S. nuclear first strike was in progress. His decision may have included a desire to put mercy over patriotism and not to participate in the annihilation of the human race.

Unfortunately, an AI system would have no such qualms. An AI system might or might not be trained with a self-preservation instinct. If not, it would not care if it is destroyed, and it could take needless risks, carelessly bluffing and escalating, seeking to make the adversary back down through suicidal brinksmanship.

One might think, therefore, that it is important to endow battlefield AI systems with a self-preservation instinct, but the consequences of doing so could be even worse. Once a weaponized AI system is given a self-preservation instinct, it may become impossible to shut down, as it would kill anyone who tried to shut it off, including its own country’s military or government leaders.

These killings might play out in several ways. On a small scale, the system could limit its “self-defense” killings by targeting only the officials trying to disable the AI system. Worse, the AI system could try to preserve itself by threatening mutually assured destruction: if it ever suspected, even incorrectly, that its shutdown was imminent, the AI system could retaliate with the launch of nuclear weapons.

A dying AI system, still energized for a few milliseconds by the last electrical charge remaining in its internal capacitors, could even nuke not just its own nation but the entire surface of Earth.

In 2011, Jonathan Rodriguez founded Vergence Labs, which developed computerized smartglasses. Vergence was acquired by Snap Inc., where he is now manager of new device prototyping.

Michael T. Klare’s article “Autonomous Weapons Systems and the Laws of War” (ACT, March 2019) provides an excellent overview of the risks of the military uses of autonomous weapons. We need some kind of international control to ensure that autonomous military technologies obey the laws of war and do not cause inadvertent escalation. The Convention on Certain Conventional Weapons (CCW) offers the best chance for meaningful regulation.

Klare identifies three strategies for regulating autonomous weapons: a CCW protocol banning autonomous weapons, a politically binding declaration requiring human control, and an ethical focus arguing that such weapons violate the laws of war. I would add two other strategies: a CCW protocol short of prohibition and a prohibition negotiated outside of the CCW, the way the 1997 Mine Ban Treaty grew out of CCW negotiations.

Of these five strategies, a new CCW protocol provides the best hope for mitigating the risks. While a total prohibition would be preferable, it might be more realistic to agree on a set of regulations, such as a requirement that a human operator always be available to take over. Anything short of a new, legally binding treaty would likely be too weak to influence the states most interested in deploying autonomous weapons, while a treaty negotiated outside the CCW would likely be too restrictive.

To prevent the arms race that Klare describes, any method for controlling autonomous weapons will need the buy-in of the countries with the most advanced autonomous weapons programs, and they are currently very skeptical of any control. A politically binding declaration or an appeal to the Hague Convention’s Martens Clause might have normative power, but that will not be enough to deter many states from seeking the military advantages that autonomous weapons could offer.

While reaching an agreement in the consensus-based CCW will be difficult, negotiating a treaty outside the CCW will likely be impossible. Given the success of groups like Human Rights Watch in achieving the Mine Ban Treaty and the Convention on Cluster Munitions (CCM), an agreement controlling autonomous weapons in some way can be completed. What remains to be seen is whether such an agreement will be enough to change the trajectory we are currently on.

Lisa A. Bergstrom is a technology and security specialist in Berkeley, Calif.