The New Synergy Between Arms Control and Nuclear Command and Control

January/February 2020

By Geoffrey Forden

There are renewed worries that the U.S. nuclear command, control, and communications system (NC3) might be attacked with cyberweapons, potentially triggering a war.1 These concerns have been present since at least 1972 when the Air Force Computer Security Technology Planning Study Panel found that the “current systems provide no protection [against] a malicious user.”2

The situation has not improved in the intervening years, and a 2013 Department of Defense Science Board report stated that the Pentagon is “not prepared to defend against” cyberattacks and that the military could lose “trust in the information and ability to control U.S. systems and forces [including nuclear forces].”3 Clearly, something must be done to ensure that malicious actors cannot launch U.S. nuclear weapons or prevent them from being launched.

The risk of the United States losing control over its nuclear forces has spurred a variety of proposed solutions. Former Secretary of the Air Force Mike Wynne has suggested returning to analog computers, which were state of the art in the 1950s, to combat cyberattacks on NC3.4 Such computers process information with a set of discrete electronic circuit elements (resistors, capacitors, and solenoids). Fixed in place, these circuits cannot be “hacked” by malicious, off-site users because doing so would require changing their physical position or parameters. The philosophy behind such proposals is that entry into any computer or communications system is inevitable, but an analog system would be less vulnerable to intrusions. Still, it is not clear how vulnerable such systems would be to malicious insiders.

Returning to analog computers, however, would have tremendous limitations on how much information could be processed and would almost certainly require two entirely separate systems: one for processing highly classified information gathered about real-world situations and a second, completely separate system for sending emergency action messages directing the targeting and launch of nuclear weapons. The lack of flexibility of such a system would likely limit the ability to tailor U.S. deterrence.

A better solution would entail using encryption tools known as physically unclonable functions (PUFs) that ensure protection against cyberthreats. This concept would assume that an actor will inevitably break into the U.S. NC3 and that its launch codes and the ability to use them must not depend on preventing hacking. The launch codes would not be stored in the memory of any computer. Instead, PUFs located at the National Command Authority would generate the encrypted codes when needed and only then. These would be interpreted only by the nuclear weapons themselves and only by using another physically unclonable device that generates the decryption key.

The use of these tools could have far-reaching and beneficial implications for arms control. The technology could enable a new generation of arms control agreements that could verify actual nuclear warheads, not just nuclear delivery vehicles, with greater levels of confidence that treaty parties are complying with treaty limits on deployed warheads and not diverting the real ones to covert storage.

Understanding Encryption Keys

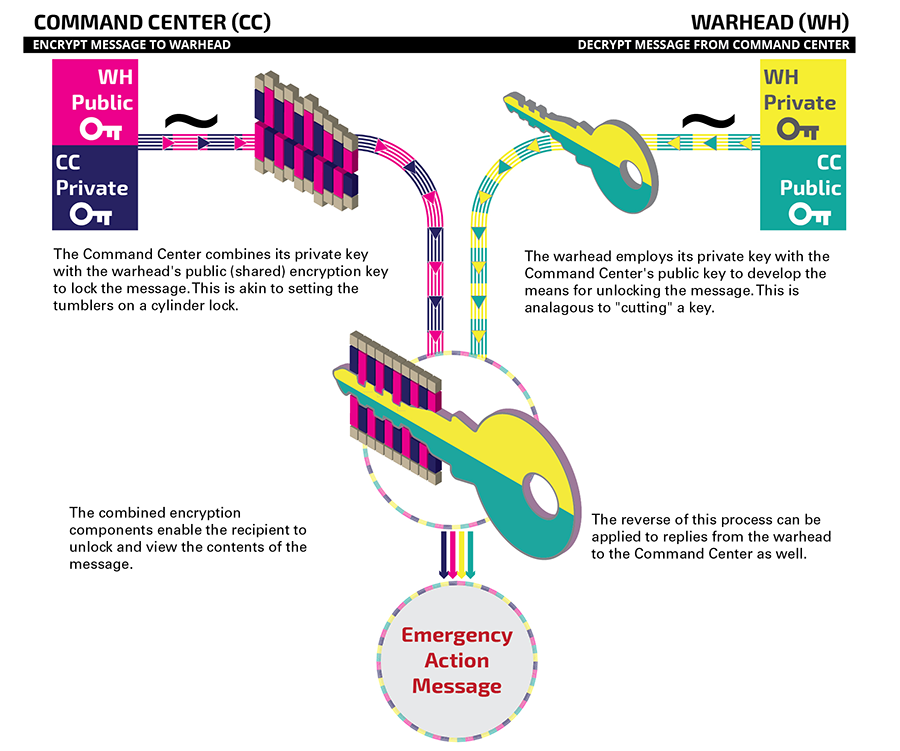

Today, state-of-the-art cybersecurity is based on Public Key Infrastructure. This protocol involves a pair of encryption keys: a private one not shared with anyone and a public one that can be distributed around the world. This procedure is standard today. The unique feature being introduced is that the nuclear command center is communicating directly with each nuclear weapon.

These keys are “matched” and must be used as pairs. For example, if the command center wants to send a message that only the nuclear weapon can read, it uses the public key generated by the weapon, which is matched with the weapon’s private encryption key that only the weapon knows. The key can be public because it does not matter who sends messages that only the weapon can decrypt. The command center uses the weapon’s public encryption key to encode its message and sends it to the weapon, which has the matched private key that can decrypt it. The command center also wants to ensure that the nuclear weapon knows the message is from the commander and not from some hacker who has broken into the system, so it signs the message by encrypting it with its own private encryption key. No one else can “sign” the message in this way, so the weapon and anyone else who sees it know the message is genuine and coming from the command authority.
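The exchange described above can be sketched in a few lines of Python. This is only a toy illustration, assuming textbook RSA with tiny primes and no padding, which is not secure and is not drawn from any fielded system. The function names (`make_keypair`, `apply_key`, `send`, `receive`), the primes, and the numeric "order" are all hypothetical choices made for the example.

```python
# Toy illustration only: textbook RSA with tiny primes and no padding. NOT secure.

def make_keypair(p, q, e=17):
    """Return (public_key, private_key) built from two small primes."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)          # modular inverse (Python 3.8+)
    return (n, e), (n, d)

def apply_key(value, key):
    """Raise value to the key's exponent modulo the key's modulus."""
    n, exponent = key
    return pow(value, exponent, n)

# Hypothetical key pairs; the command modulus is the smaller of the two so
# that a signature always fits inside the weapon's modulus.
command_public, command_private = make_keypair(61, 53)
weapon_public, weapon_private = make_keypair(89, 97)

def send(message, sender_private, recipient_public):
    """Sign with the sender's private key, then encrypt both parts for the recipient."""
    signature = apply_key(message, sender_private)
    return (apply_key(message, recipient_public),
            apply_key(signature, recipient_public))

def receive(ciphertext, recipient_private, sender_public):
    """Decrypt with the recipient's private key and check the sender's signature."""
    encrypted_message, encrypted_signature = ciphertext
    message = apply_key(encrypted_message, recipient_private)
    signature = apply_key(encrypted_signature, recipient_private)
    authentic = apply_key(signature, sender_public) == message
    return message, authentic

order = 1234  # stand-in for a command, encoded as a small integer
ciphertext = send(order, command_private, weapon_public)
message, authentic = receive(ciphertext, weapon_private, command_public)
```

In this sketch, an attacker who substitutes a different order but can only replay an old signature is rejected, because the signature no longer matches the decrypted message.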

The usual public/private key encryption method has a major risk: if hackers or malicious insiders have invaded the system, then they might have been able to find both the command center’s and the weapon’s private encryption keys. With the weapon’s private key, they could read all the messages sent to the weapon and, stamping fake messages they write themselves with the commander’s private encryption key, fool the weapon into believing a message is from the commander when really it was from an adversary.

One solution to this problem of hackers inside the command and control system is not to store the private encryption keys anywhere but to regenerate the same unique and unguessable private key every time it is needed. This private-key generation could be done with some physical process that cannot be controlled by computer. This would be immune to hackers inside the system and the insider threat as well, although a full vulnerability assessment would still need to be done.

How can the same, unique private encryption keys be generated each time one needs them? Fortunately, PUFs do just this. They are based on the fact that even precisely manufactured microelectronic circuits still have some variation that can be measured but not reproduced. A PUF that measures whether each of these variations is above some threshold can generate a string of 0s (below threshold) or 1s (above threshold) as long as needed, certainly several million digits.
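A minimal sketch of the thresholding idea follows. It assumes a purely simulated device: the class `SimulatedPUF`, the seeded random generator standing in for manufacturing variation, and the use of SHA-256 to condense the bits into a key are all illustrative assumptions, not a description of any real PUF. Real devices also have measurement noise and typically need error correction, which this sketch ignores.

```python
import hashlib
import random

class SimulatedPUF:
    """Software stand-in for a physical device (hypothetical, for illustration)."""

    def __init__(self, device_seed, n_cells=256):
        # The seed models uncontrollable manufacturing variation; in a real
        # PUF these values exist only in the hardware and cannot be copied.
        rng = random.Random(device_seed)
        self._variations = [rng.gauss(0.0, 1.0) for _ in range(n_cells)]

    def read_bits(self, threshold=0.0):
        """Return 1 for each variation above the threshold, else 0."""
        return [1 if v > threshold else 0 for v in self._variations]

    def derive_key(self):
        """Regenerate the same key on demand; nothing is stored between calls."""
        bit_string = "".join(str(b) for b in self.read_bits())
        return hashlib.sha256(bit_string.encode("ascii")).hexdigest()

device_a = SimulatedPUF(device_seed=1)
device_b = SimulatedPUF(device_seed=2)
key_first_use = device_a.derive_key()
key_second_use = device_a.derive_key()
```

The same device yields the same key each time it is asked, while a second device with different variations yields a different key.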

When the command authority sends a message to a weapon using PUFs, they both generate their own private keys only when needed. Without storing the private keys somewhere on a computer, the hackers and insiders cannot gain access to them.

The arms control connection arises from the ability to verify individual weapons and the possibility of using this in future arms control treaties.

Cyberdangers

Nuclear weapons systems from early-warning systems to national command authority centers to the delivery systems themselves are increasingly dependent on computers. All of these NC3 system components of the United States and other nations become potential targets for adversaries during and immediately prior to war. They are also subject to cyberterrorist attacks at any time. One systematic way of thinking about these threats describes them by three general threat categories: misinformation introduced to the nuclear “infosphere” that might make command authorities unaware of a nuclear attack or believe there is one when there is not; cyberattacks intended to disable or destroy nuclear weapons, preventing them from being launched when the national authority wants them to be launched; and cyberattacks intended to launch nuclear weapons under false circumstances, such as issuing counterfeit launch orders.5

Some of these attacks, particularly planting misinformation into the nuclear infosphere, are more relevant for national command centers than the nuclear weapons themselves. As an illustration of a cyberattack in the misinformation category, a cyberattack on an air defense system intercepted signals sent from the radar to the command center and prevented the controllers from even knowing there was an attack underway. Others could be aimed at the launch systems themselves. It is in these latter cases that embedded NC3 becomes most important. If the warheads themselves generate public/private encryption keys and do not share the private key with other elements of the nuclear enterprise, the cybersecurity of launch control can be greatly enhanced. Not doing so continues to leave the command system for launching nuclear weapons susceptible to a number of cyberattacks that have been known to jump even “air gaps” such as those separating NC3 networks from the public internet.

Moving verification of the president’s launch orders into the weapon itself can be thought of as embedding NC3 into the nuclear weapon and conversely integrating the weapon into the NC3 architecture. In this implementation of using PUFs in NC3, the warhead uses the PUF to generate public and private encryption keys. The weapon’s public key is sent to the national command authority while the warhead generates the corresponding private key to decrypt messages each time the weapon is signaled. The weapon’s public key is then used by the command authority to encrypt its commands to that warhead.6 The national command authority further encrypts a message to the warhead with its private key, which the warhead authenticates by using the command authority’s public key.

The dual process of encrypting the message and authenticating it is analogous to making a lock whose tumblers are a combination of the receiver’s public key (encryption) and the sender’s private key (authentication). The message can only work when both pairs are present.

Questions have been raised about whether PUFs are truly unclonable. If someone has unlimited access to the PUF, they might be able to send it enough challenge-response pairs (CRPs) that another device could be constructed to respond in exactly the correct way. A PUF-protected NC3 system would therefore limit the number of times CRPs can be sent to the warhead. Once the limit is reached, the warhead would need to be returned to the warhead assembly facility to have its PUF replaced. Yet, if the right type of PUF is used, this limit can be set high enough so that this should almost never be necessary during normal use. In fact, the limit can be high enough so that only if the owner of the warhead tries to hack it, for example, while trying to break the arms control aspects and cheat on any treaty agreement, will the warhead have to be sent back.
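The query limit described above can be sketched as a simple counter. This is an assumption-laden illustration: the class `QueryLimitedPUF` is hypothetical, and a keyed hash (HMAC-SHA256 over a device secret) is swapped in as a software stand-in for the physical challenge-response behavior of a real PUF.

```python
import hashlib
import hmac

class QueryLimitedPUF:
    """Hypothetical PUF wrapper that stops answering after a fixed number of challenges."""

    def __init__(self, device_secret: bytes, max_queries: int):
        self._secret = device_secret      # stand-in for physical variation
        self._remaining = max_queries

    def respond(self, challenge: bytes) -> bytes:
        """Answer a challenge, or refuse once the limit has been used up."""
        if self._remaining <= 0:
            raise RuntimeError("query limit reached: PUF must be replaced")
        self._remaining -= 1
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()

puf = QueryLimitedPUF(device_secret=b"example-device", max_queries=2)
first = puf.respond(b"challenge-1")
second = puf.respond(b"challenge-2")
try:
    puf.respond(b"challenge-3")
    locked = False
except RuntimeError:
    locked = True   # a third query exceeds the limit
```

Setting the limit well above the number of queries expected in normal use means only an attempt to harvest many challenge-response pairs would trip it.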

It is theoretically possible to use the same PUF-based device for the NC3 and arms control mission, but it would be best to have two separate PUF-based devices in the nuclear weapon. The NC3-devoted PUF-based device would generate the public/private key pair and decode messages from the national command authority. The arms control-dedicated PUF would use the same communications infrastructure internal to the weapon that the NC3 PUF-based device does and would be based on a large number of CRPs generated at the time the warhead was assembled. Arms control inspectors would have a record of those CRPs, but the owner of the weapon would not. Cheating by testing the PUF with enough queries would be prevented by breaking the arms control PUF after a fixed number of queries have been sent to it.

Separating these two functions into different devices completely removes any possibility that the treaty partner could access the weapon’s private launch code during the proposed treaty-mandated manufacturing process while still facilitating the arms control aspects.

Arms Control Opportunities: Warhead Accounting

The most likely direction for future arms control treaties is a move from limiting the number of delivery systems to restricting the actual number of warheads allowed. The basic idea behind these treaties is to prevent a treaty partner from possessing undeclared warheads or diverting declared warheads to a covert stockpile. Early concepts of a warhead-accountable treaty foresaw each treaty party periodically declaring the location of each nuclear weapon (mounted on a missile at a specific base or at a declared weapons storage depot). On a random but controlled basis, inspectors from a treaty partner country would come and “verify” that a randomly selected subset of warheads declared to be at that facility was actually present. Most of these concepts for verification involve taking radiation measurements of the warhead in a way that protects classified information about the weapon’s design.

Although these measurements can individually be done quickly, perhaps in a minute or less, they all require the selected nuclear weapons to be moved to a central location for verification. This significantly limits the number of nuclear weapons that can be verified during any visit. Furthermore, few if any of these concepts of operations envision verifying deployed warheads—an obvious gap if one is looking for undeclared weapons. The confidence of a treaty party that a significant number of warheads have not been diverted increases rapidly as more warheads are verified.
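The way confidence grows with sample size can be illustrated with a standard sampling calculation. The sketch below assumes inspectors check a random sample without replacement and that any fake or missing warhead in the sample is always detected. The function name and the stockpile figures are hypothetical and chosen only for illustration.

```python
from math import comb

def detection_probability(total, diverted, inspected):
    """Chance that a random sample of `inspected` items out of `total`
    contains at least one of the `diverted` (fake or missing) warheads."""
    if inspected > total - diverted:
        return 1.0  # the sample is too large to avoid every diverted item
    return 1.0 - comb(total - diverted, inspected) / comb(total, inspected)

# Hypothetical stockpile: 1,000 declared warheads, 50 of them diverted.
small_sample = detection_probability(1000, 50, 20)
large_sample = detection_probability(1000, 50, 200)
```

Under these assumptions, the detection probability rises steeply with the number of warheads checked, which is why a method that can interrogate many warheads per visit matters.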

Much of the power of warhead accounting comes from authenticating warheads over a period of time and at a variety of facilities. In this way, confidence is built between treaty partners. A PUF-based electronic interrogation should make it possible to count a large number of warheads, including warheads mounted on missiles and even on alert, although not at sea. This would greatly increase the confidence that a treaty partner has not diverted any weapons. It would also make it possible to verify that any warhead present at the facility, and not just those requested based on declarations, is actually a warhead.

Implementation: How to Prevent the Warhead From Lying

An immediate objection to this plan is the possibility that a fake warhead might lie about its identity. A treaty partner might wish to do this in order to have two or more warheads with the same “identity,” thereby building up a larger arsenal than it has declared. In this case, the treaty partner might gamble that treaty inspectors are unlikely to discover the identically labeled warheads, but PUF-based accounting makes this a dangerous risk because so many more warheads can be verified.

A cheating treaty partner might also want to move a real warhead to a covert facility while sending a fake warhead to a dismantlement facility, again increasing the number of actual warheads in its arsenal above the agreed limit. Technical and procedural measures can prevent such lying. First, the PUF circuit, which would be developed and produced in cooperation with the treaty partners and therefore should be trusted by all,7 will not allow the host country as the owner of the warhead to interrogate the PUF enough times that it could be cloned.

To fully implement this, the PUF would need to be placed in a location in the warhead that would require shipment to a warhead assembly facility for replacement. This could be ensured by including a simple radiation detector that would report whether a threshold amount of radiation was met or surpassed, although further work must be done on this assurance.

When the PUF circuits are manufactured, their library of CRPs is recorded in a secure memory storage unit with the capability of interrogating each warhead and authenticating them by comparing their responses to this library. The information they contain is prevented from being disclosed or changed by only communicating with the inspection tool through information diodes. These units are stored in a tamperproof container and placed under dual control where both treaty partners must be present to access the device.
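The comparison against a recorded library can be sketched as follows. This is a simplified illustration under stated assumptions: a keyed hash (HMAC-SHA256) stands in for the physical PUF response, and the helper names `puf_response`, `record_library`, and `authenticate` are hypothetical. The information diodes, tamperproof container, and dual-control procedures described above are not modeled.

```python
import hashlib
import hmac
import secrets

def puf_response(device_secret: bytes, challenge: bytes) -> bytes:
    """Software stand-in for the physical response of a warhead's PUF."""
    return hmac.new(device_secret, challenge, hashlib.sha256).digest()

def record_library(device_secret: bytes, n_pairs: int) -> dict:
    """At manufacture, record a library of challenge-response pairs (CRPs)."""
    library = {}
    for _ in range(n_pairs):
        challenge = secrets.token_bytes(16)
        library[challenge] = puf_response(device_secret, challenge)
    return library

def authenticate(library: dict, ask_warhead) -> bool:
    """Use one recorded pair, never to be reused, and compare the reply."""
    challenge, expected = library.popitem()
    return hmac.compare_digest(ask_warhead(challenge), expected)

genuine_secret = b"genuine-warhead"      # hypothetical device identity
library = record_library(genuine_secret, n_pairs=5)

genuine_ok = authenticate(library, lambda c: puf_response(genuine_secret, c))
fake_ok = authenticate(library, lambda c: puf_response(b"fake-warhead", c))
```

Consuming each pair once keeps an eavesdropper from replaying an earlier answer, at the cost of a finite number of inspections per library.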

Finally, in any treaty that counts warheads, there must be perimeter monitoring, which checks all the containers going into and out of the warhead assembly facility without revealing any classified information other than that a warhead is present. When a new or refurbished warhead leaves the facility, the treaty partners monitoring the facility can authenticate each warhead using the PUF mechanisms. If there are doubts about whether a shipment leaving the facility contains a warhead, other steps can be taken, such as a radioactivity measurement or a visual search. This concept of perimeter monitoring is not new and was fully implemented under the Intermediate-Range Nuclear Forces Treaty when U.S. and Soviet inspectors monitored everything that left the U.S. and Soviet missile production plants at Magna and Votkinsk, respectively.

Conclusion

In the context of NC3, enabling nuclear weapons to create their own encryption keys with PUF-based devices provides a considerable number of advantages. First, the weapon provides its own private encryption key that does not have to be stored elsewhere. Second, the same unique private encryption key is generated each time it is needed and hence cannot be accessed at other times by unauthorized users. Third, this concept mitigates the danger of a malicious insider or a foreign or terrorist actor launching or preventing the launch of U.S. nuclear weapons even if they have gained access to the NC3 system. Fourth, this concept imposes no barriers to tailoring deterrence. Finally, this solution can be implemented and still have a human in the loop before launch.

For arms control purposes, PUF-based circuits could be used to monitor stored and, potentially, deployed nuclear warheads. This would improve the confidence of all treaty partners that nobody is secretly diverting warheads from declared stockpiles. Radiation-based warhead authentication cannot assess the same number of warheads as a PUF-based verification system, so the new system would provide a higher level of confidence in the treaty partner’s stockpile.

ENDNOTES

1. For example, see Erik Gartzke and Jon R. Lindsay, “Thermonuclear Cyberwar,” Journal of Cybersecurity, Vol. 3, No. 1 (2017): 37-48.

2. James P. Anderson, “Computer Security Technology Planning Study,” U.S. Air Force, ESD-TR-73-51, Vol. 1, October 1972, https://csrc.nist.gov/csrc/media/publications/conference-paper/1998/10/08/proceedings-of-the-21st-nissc-1998/documents/early-cs-papers/ande72a.pdf.

3. U.S. Department of Defense, Defense Science Board Task Force on Resilient Military Systems and the Advanced Cyber Threat, “Task Force Report,” January 2013, https://nsarchive2.gwu.edu/NSAEBB/NSAEBB424/docs/Cyber-081.pdf.

4. Mike Wynne, “Trump DepSecDef Prospect Urges Federal Cyber to Go Analog,” Breaking Defense, November 23, 2016.

5. Andrew Futter, Hacking the Bomb: Cyber Threats and Nuclear Weapons (Washington, DC: Georgetown University Press, 2018).

6. Once a message is encrypted with the warhead’s public key, only the warhead’s private key can decode it. Hacking the command center and stealing the warhead’s encryption keys do not risk enabling the hacker to launch nuclear weapons. Extreme care must be taken with the command center’s private key. It is possible that a physically unclonable function implementation of that key might also be a good idea.

7. Previous projects have explored joint development projects. The Joint Verification Experiment, in which the two sides developed technical means of “calibrating” the seismic signals from underground nuclear tests at their respective test sites, is one such example. The Warhead Safety and Security Exchange project explored joint scientific research between Russian and U.S. nuclear weapons laboratories in which the countries worked together on safety and security of nuclear warheads during transportation and storage, safety issues associated with the aging of high explosives, and the assurance of the safety and security of nuclear warheads during dismantlement.

Geoffrey Forden is a physicist and principal member of the technical staff at the Cooperative Monitoring Center at Sandia National Laboratories. This article describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the U.S. government. Sandia National Laboratories is a multimission laboratory managed and operated by National Technology and Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA0003525.