Pentagon Invests in AI, Issues Principles

May 2020

By Michael Klare

The Trump administration’s fiscal year 2021 Defense Department budget request prioritizes spending on artificial intelligence (AI) research and procurement of AI-enabled weapons systems, including unmanned autonomous ships, aircraft, and ground vehicles. Among these requests is $800 million for accelerated operations by the Joint Artificial Intelligence Center (JAIC), the Pentagon’s lead agency for applying AI for military purposes, and for Project Maven, a companion project that seeks to employ sophisticated algorithms in identifying potential military targets among masses of video footage. (See ACT, July/August 2018.)

In February, when presenting the Pentagon’s proposed budget, Deputy Secretary of Defense David L. Norquist indicated that some existing weapons programs would be cut or trimmed to free up funds for increased spending on sophisticated systems needed for what he called the “high end” wars of the future. Such engagements, Norquist suggested, will entail intense combat with the well-equipped forces of great power competitors, notably China and Russia.

If U.S. forces are to prevail in such encounters, he added, they will need to possess technological superiority over their rivals, which requires ever-increasing investment in “critical emerging technologies,” especially AI and autonomous weapons systems.

In advocating for these undertakings, senior Defense Department officials have consistently emphasized the need to speed the weaponization of AI and autonomous weapons systems.

“Leadership in the military application of AI is critical to our national security,” Gen. John (“Jack”) Shanahan, the commander of JAIC, told reporters last August. “For that reason, I doubt I will ever be entirely satisfied that we’re moving fast enough when it comes to [the Defense Department’s] adoption of AI.” At the same time, however, top leaders insist that they are mindful of the legal and ethical challenges of weaponizing advanced technologies. “We are thinking deeply about the ethical, safe, and lawful use of AI,” Shanahan insisted.

The Pentagon’s recognition of the ethical dimensions of applying AI to military use stems from three sets of concerns that have emerged in recent years. The first encompasses fears that as human oversight diminishes, AI-enabled autonomous weapons systems could “go rogue” and engage in acts that violate international humanitarian law. (See ACT, March 2019.) The second arises from evidence that facial-identification algorithms and other AI-empowered systems often contain embedded biases that could result in unfair or illegal outcomes. Finally, analysts worry that it can be very difficult to identify and trace the algorithmic flaws that might produce such outcomes.

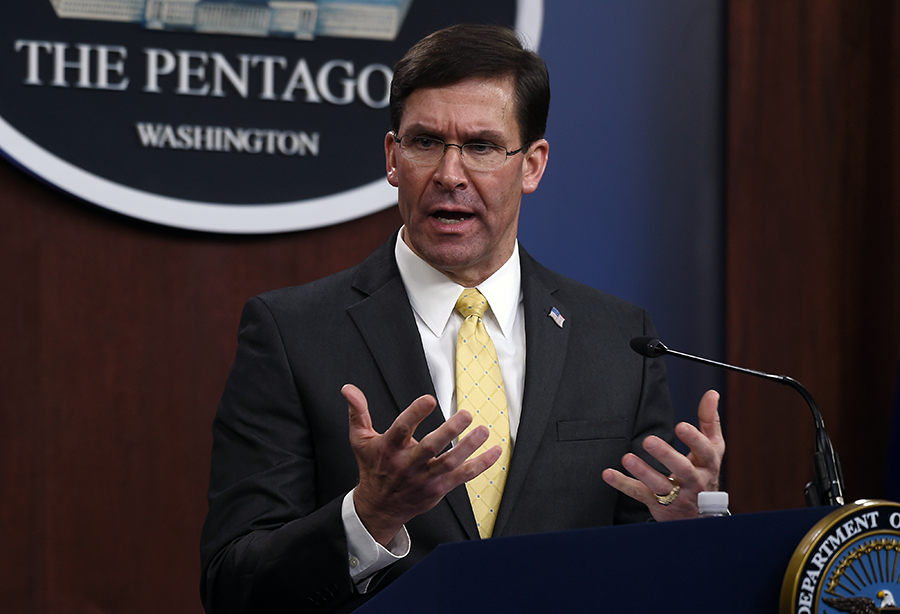

In recognition of these concerns, the Defense Department has taken a number of steps to demonstrate its awareness of the risks associated with hasty AI weaponization. In 2018, Defense Secretary Jim Mattis asked the Defense Innovation Board (DIB), a semiautonomous body reporting directly to senior Pentagon leadership, to develop a set of “ethical principles” for the military application of AI. After conducting several meetings with lawyers, computer scientists, and representatives of human rights organizations, the DIB delivered its proposed set of principles last Oct. 31 to Defense Secretary Mark Esper. (See ACT, December 2019.) After an internal review, Esper’s office finally proclaimed its acceptance of the DIB formula Feb. 24.

In announcing his decision, Esper made it clear that the rapid weaponization of AI and other emerging technologies remains the department’s top priority. The United States, he declared, “must accelerate the adoption of AI and lead in its national security applications to maintain our strategic position [and] prevail on future battlefields.” At the same time, he noted, adoption of the new AI guidelines will buttress “the department’s commitment to upholding the highest ethical standards.”

Of the five ethical principles announced by Esper, four, covering the responsible, equitable, traceable, and reliable use of AI, address the risk of bias and the need for human supervisors to oversee every stage of AI weaponization and be able to identify and eliminate any flaws they detect. Only the final principle (governable) addresses the risk of unintended and possibly lethal action by AI-empowered autonomous systems. Under this key precept, human operators must possess “the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.”

If fully implemented, the five principles adopted by Esper in February could go a long way toward reducing the risks identified by critics of rapid AI weaponization. But this will require that all involved military personnel be educated about these precepts and that contractors abide by them in developing new military systems. When and how this process will unfold remains largely unknown. Devising the five principles, Shanahan explained on Feb. 24, “was the easy part.”

“The real hard part,” he continued, is “understanding where those ethics principles need to be applied.” At this moment, that process has barely begun, but the acquisition of AI-enabled weapons systems seems to be advancing at top speed.